Our recent research in neurosymbolic systems

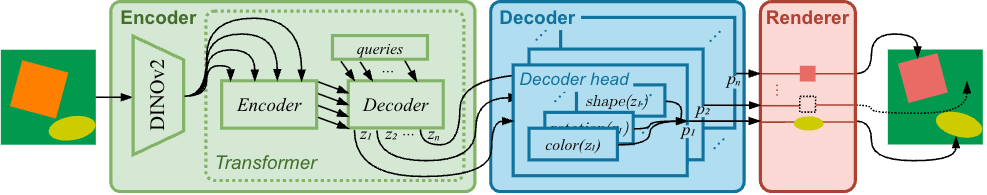

Generative Learning of Differentiable Object Models for Compositional Interpretation of Complex Scenes

Antoni Nowinowski and Krzysztof Krawiec

Accepted at NeurIPS 2025 Workshop on SPACE in Vision, Language, and Embodied AI (SpaVLE)

https://arxiv.org/abs/2506.08191

This study builds on the architecture of the Disentangler of Visual Priors (DVP), a type of autoencoder that learns to interpret scenes by decomposing the perceived objects into independent visual aspects of shape, size, orientation, and color appearance. These aspects are expressed as latent parameters which control a differentiable renderer that performs image reconstruction, so that the model can be trained end-to-end with gradient-based optimization using a reconstruction loss. In this study, we extend the original DVP so that it can handle multiple objects in a scene. We also exploit the interpretability of its latent space by using the decoder to sample additional training examples and devising alternative training modes that rely on loss functions defined not only in the image space, but also in the latent space. This significantly facilitates training, which is otherwise challenging due to the presence of extensive plateaus in the image-space reconstruction loss. To examine the performance of this approach, we propose a new benchmark featuring multiple 2D objects, which subsumes the previously proposed Multi-dSprites dataset while being more parameterizable. We compare the DVP extended in these ways with two baselines (MONet and LIVE) and demonstrate its superiority in terms of reconstruction quality and capacity to decompose overlapping objects. We also analyze the gradients induced by the considered loss functions, explain how they impact the efficacy of training, and discuss the limitations of differentiable rendering in autoencoders and the ways in which they can be addressed.
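
The plateaus mentioned above can be illustrated with a toy example. The sketch below is not the DVP renderer: it assumes a hypothetical one-dimensional, hard-edged `render` function and a single latent parameter (the object's center), and compares an image-space loss with a latent-space loss. All names in it are illustrative.

```python
def render(center, width=4, size=32):
    """Toy 1D 'renderer' (an assumption, not DVP's): pixels within
    width/2 of the center are 1.0, all other pixels are 0.0."""
    return [1.0 if abs(i - center) <= width / 2 else 0.0 for i in range(size)]


def image_loss(center, target_center):
    """Mean squared error between the rendered and the target image."""
    rendered, target = render(center), render(target_center)
    return sum((r - t) ** 2 for r, t in zip(rendered, target)) / len(rendered)


def latent_loss(center, target_center):
    """Squared error computed directly on the latent parameter."""
    return (center - target_center) ** 2


target = 24
for c in (4, 8, 12, 22, 24):
    # The image loss stays constant while the objects do not overlap,
    # whereas the latent loss keeps decreasing as c approaches the target.
    print(c, image_loss(c, target), latent_loss(c, target))
```

In this toy setting, the image-space loss is identical for centers 4, 8, and 12, because the rendered object does not overlap the target, so it gives no indication of which direction to move in. The latent-space loss decreases steadily over the same range, which is one intuition for why latent-space training modes may help.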

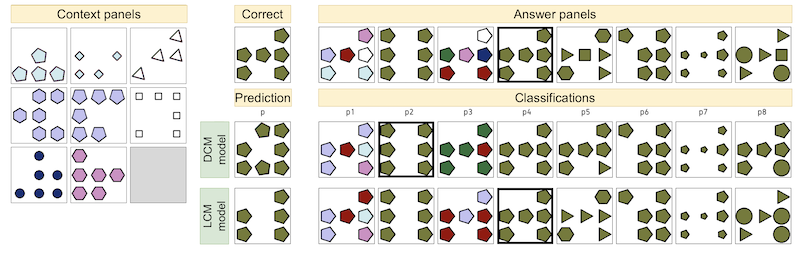

Staged Self-Supervised Learning for Raven Progressive Matrices

Jakub Kwiatkowski and Krzysztof Krawiec

IEEE Transactions on Neural Networks and Learning Systems (2025), pp. 1–13. https://ieeexplore.ieee.org/document/11014503

This study presents and investigates Abstract Compositional Transformers (ACT), a class of deep learning architectures based on the transformer blueprint, designed to handle abstract reasoning tasks that require completing spatial visual patterns. We combine ACTs with choice-making modules and apply them to Raven Progressive Matrices (RPM), logical puzzles that require selecting the correct image from the available answers. We devise a number of ACT variants, train them in several modes and with additional augmentations, subject them to ablations, demonstrate their data scalability, and analyze their behavior and latent representations that emerged in the process. Using self-supervision allows us to successfully train ACTs on relatively small training sets, mitigate several biases identified in RPMs in past studies, and achieve SotA results on the two most popular RPM benchmarks.

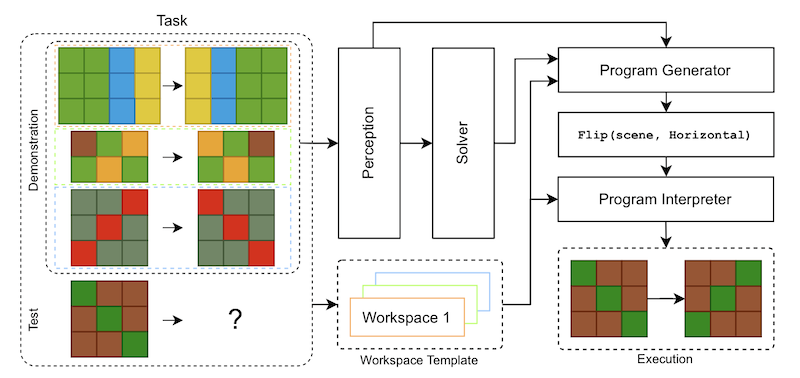

Solving abstract reasoning problems with neurosymbolic program synthesis and task generation

Jakub Bednarek and Krzysztof Krawiec

Neurosymbolic Artificial Intelligence journal (accepted).

The ability to think abstractly and reason by analogy is a prerequisite to rapidly adapt to new conditions, tackle newly encountered problems by decomposing them, and synthesize knowledge to solve problems comprehensively. We present TransCoder, a method for solving abstract problems based on neural program synthesis, and conduct a comprehensive analysis of decisions made by the generative module of the proposed architecture. At the core of TransCoder is a typed domain-specific language, designed to facilitate feature engineering and abstract reasoning. In training, we use the programs that failed to solve tasks to generate new tasks and gather them in a synthetic dataset. As each synthetic task created in this way has a known associated program (solution), the model is trained on them in supervised mode. Solutions are represented in a transparent programmatic form, which can be inspected and verified. We demonstrate TransCoder's performance using the Abstract Reasoning Corpus dataset, for which our framework generates tens of thousands of synthetic problems with corresponding solutions and facilitates systematic progress in learning.
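
The idea of turning failed programs into new training tasks can be sketched as follows. This is a minimal illustration under stated assumptions, not TransCoder itself: the two grid primitives, the list-of-names program representation, and the choice to reuse the original task's inputs are all hypothetical.

```python
def flip_h(grid):
    """Mirror each row of the grid (horizontal flip)."""
    return [row[::-1] for row in grid]


def transpose(grid):
    """Swap rows and columns of the grid."""
    return [list(col) for col in zip(*grid)]


# Hypothetical stand-in for a domain-specific language.
PRIMITIVES = {"flip_h": flip_h, "transpose": transpose}


def run(program, grid):
    """Apply the named primitives to the grid, in order."""
    for name in program:
        grid = PRIMITIVES[name](grid)
    return grid


def solves(program, task):
    """A task is a list of (input, expected_output) pairs."""
    return all(run(program, x) == y for x, y in task)


def synthesize_task(program, task):
    """Relabel the task's inputs with the outputs of the given program,
    so that the program is a known solution of the new task."""
    return [(x, run(program, x)) for x, _ in task]


# The original task asks for a vertical flip; the candidate fails it.
original_task = [([[1, 2], [3, 4]], [[3, 4], [1, 2]])]
candidate = ["flip_h"]

if not solves(candidate, original_task):
    synthetic_task = synthesize_task(candidate, original_task)
    print(synthetic_task, solves(candidate, synthetic_task))
```

The pair `(synthetic_task, candidate)` can then serve as a supervised training example, since the program is by construction a correct solution of the synthetic task.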

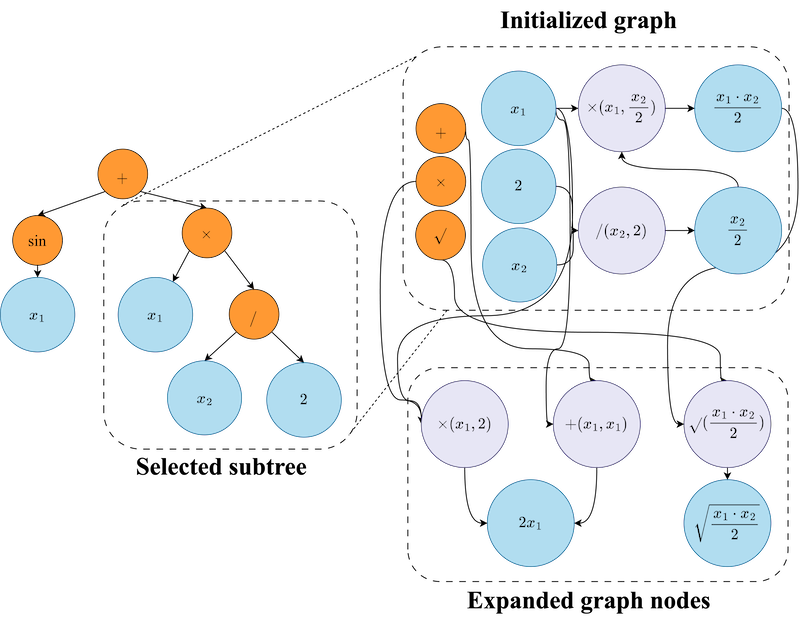

Learning Semantics-aware Search Operators for Genetic Programming

Piotr Wyrwiński and Krzysztof Krawiec

Genetic and Evolutionary Computation Conference, GECCO’25, https://doi.org/10.1145/3712255.3726600

Fitness landscapes in test-based program synthesis are known to be extremely rugged, with even minimal modifications of programs often leading to fundamental changes in their behavior and, consequently, fitness values. Relying on fitness as the only guidance in iterative search algorithms like genetic programming is thus unnecessarily limiting, especially when combined with purely syntactic search operators that are agnostic about their impact on program behavior. In this study, we propose a semantics-aware search operator that steers the search towards candidate programs that are valuable not only actually (high fitness) but also potentially, i.e. are likely to be turned into high-quality solutions even if their current fitness is low. The key component of the method is a graph neural network that learns to model the interactions between program instructions and processed data, and produces a saliency map over graph nodes that represents possible search decisions. When applied to a suite of symbolic regression benchmarks, the proposed method outperforms conventional tree-based genetic programming and the ablated variant of the method.

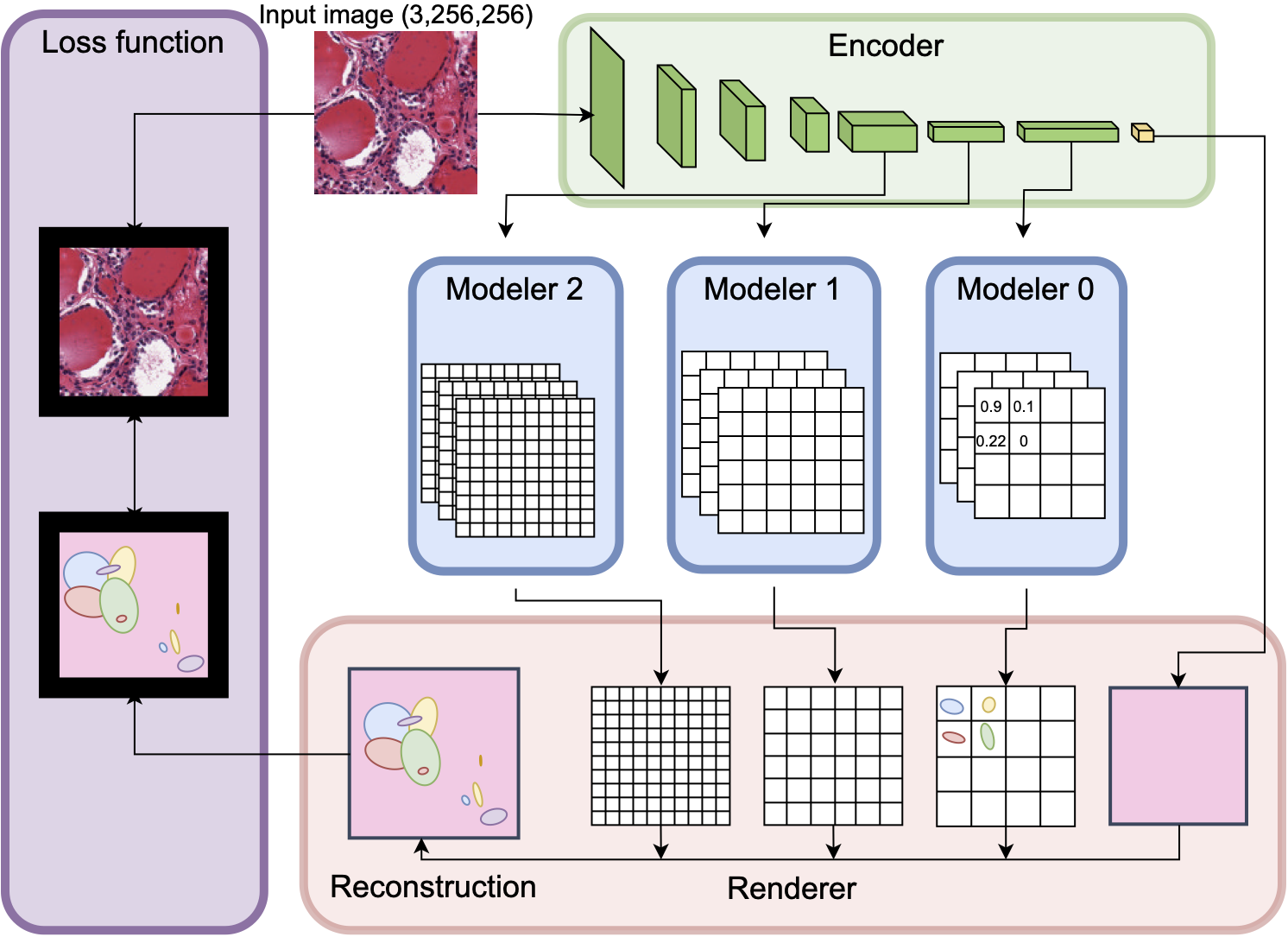

Autoassociative Learning of Structural Representations for Modeling and Classification in Medical Imaging

Zuzanna Buchnajzer, Kacper Dobek, Stanisław Hapke, Daniel Jankowski and Krzysztof Krawiec

https://arxiv.org/abs/2411.12070

Deep learning architectures based on convolutional neural networks tend to rely on continuous, smooth features. While this characteristic provides significant robustness and proves useful in many real-world tasks, it is strikingly incompatible with the physical characteristic of the world, which, at the scale in which humans operate, comprises crisp objects, typically representing well-defined categories. This study proposes a class of neurosymbolic systems that learn by reconstructing images in terms of visual primitives and are thus forced to form high-level, structural explanations of them. When applied to the task of diagnosing abnormalities in histological imaging, the method proved superior to a conventional deep learning architecture in terms of classification accuracy, while being more transparent.

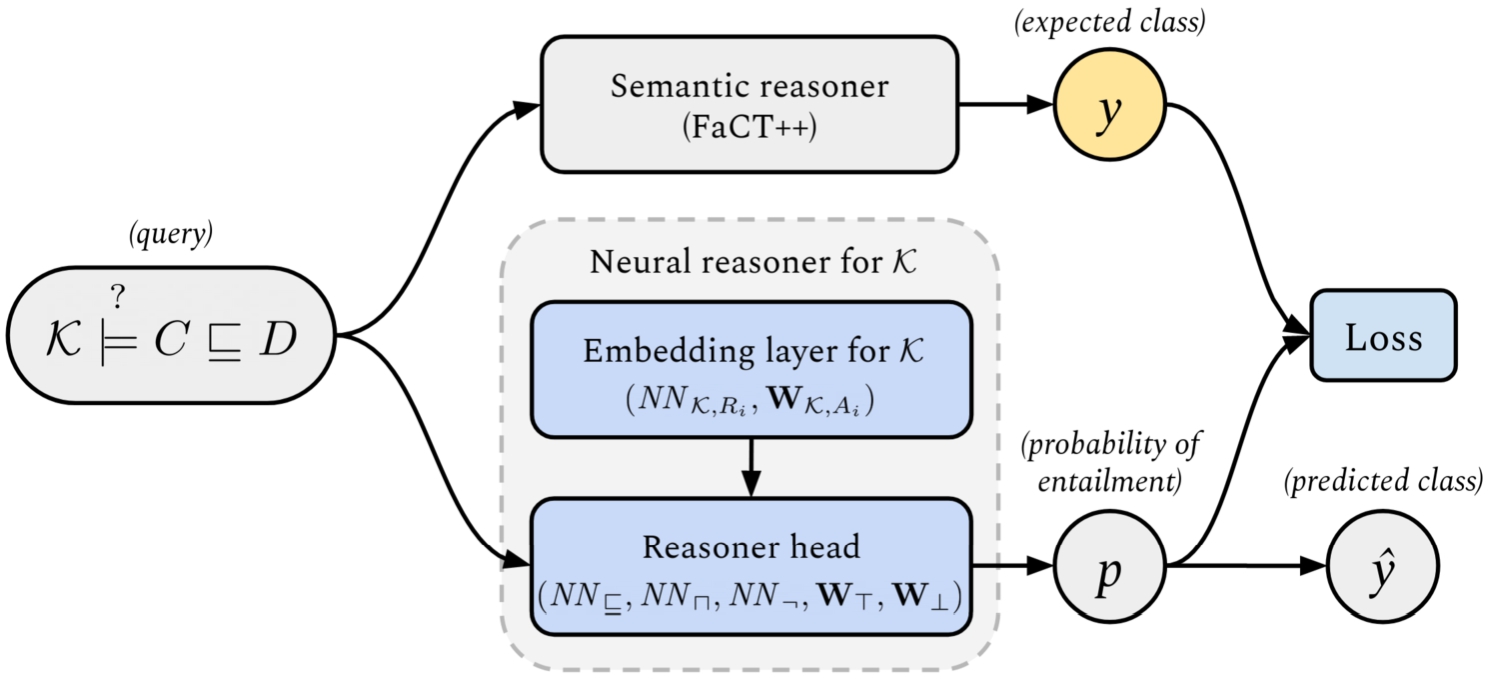

Reason-able embeddings: Learning concept embeddings with a transferable neural reasoner

Max Adamski and Jędrzej Potoniec

Semantic Web - 2024, vol. 15, no. 4, pp. 1333–1365. https://journals.sagepub.com/doi/full/10.3233/SW-233355

We present a novel approach for learning embeddings of ALC knowledge base concepts. The embeddings reflect the semantics of the concepts in such a way that it is possible to compute an embedding of a complex concept from the embeddings of its parts by using appropriate neural constructors. Embeddings for different knowledge bases are vectors in a shared vector space, shaped in such a way that approximate subsumption checking for arbitrarily complex concepts can be done by the same neural network, called a reasoner head, for all the knowledge bases. To underline this unique property of enabling reasoning directly on embeddings, we call them reason-able embeddings. We report the results of an experimental evaluation showing that the difference in reasoning performance between training a separate reasoner head for each ontology and using a shared reasoner head is negligible.

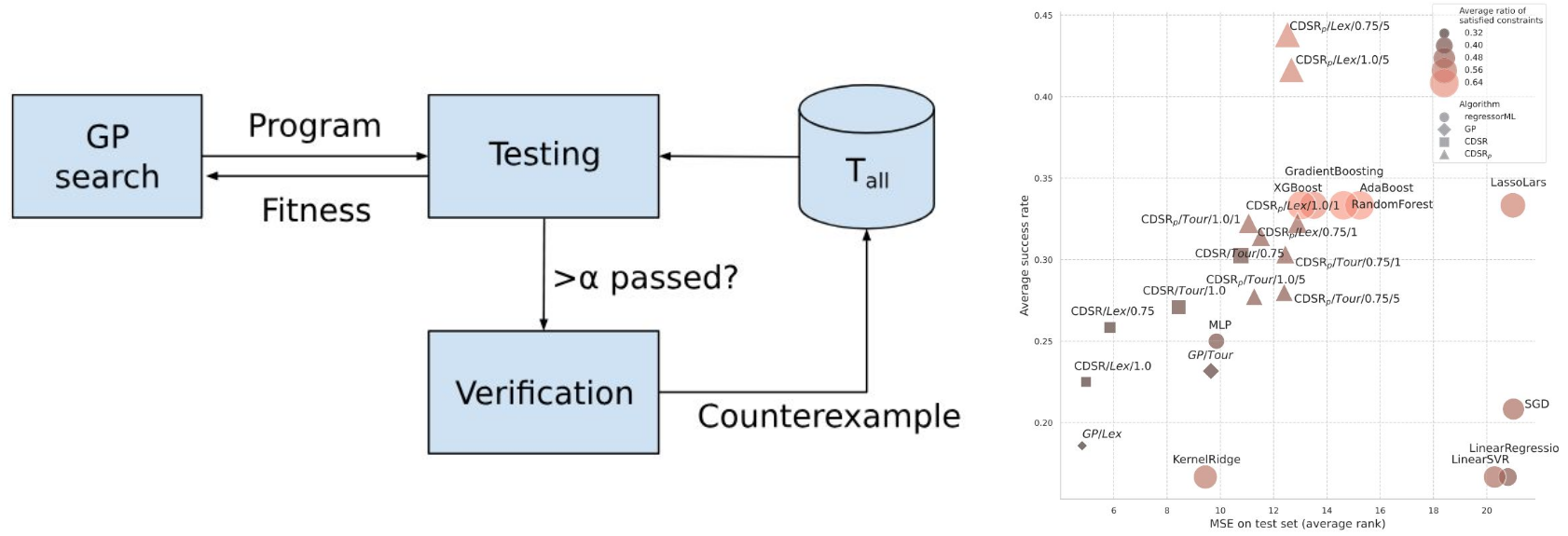

Counterexample-driven genetic programming for symbolic regression with formal constraints

Iwo Błądek and Krzysztof Krawiec

IEEE Transactions on Evolutionary Computation - 2023, vol. 27, no. 5, pp. 1327–1339. https://ieeexplore.ieee.org/document/9881536

In symbolic regression with formal constraints, the conventional formulation of the regression problem is extended with desired properties of the target model, like symmetry, monotonicity, or convexity. We present a genetic programming algorithm that solves such problems using a satisfiability modulo theories solver to formally verify the candidate solutions. The essence of the method consists in collecting the counterexamples resulting from model verification and using them to improve search guidance. The method is exact: upon successful termination, the produced model is guaranteed to meet the specified constraints. We compare the effectiveness of the proposed method with standard constraint-agnostic machine learning regression algorithms on a range of benchmarks and demonstrate that it outperforms them on several performance indicators.
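
The counterexample-driven loop can be sketched in a few lines. The sketch makes several simplifying assumptions that differ from the published method: a brute-force check over a finite grid stands in for the satisfiability modulo theories solver (so it offers no formal guarantee), a fixed pool of candidate models stands in for the genetic programming search, and counterexamples enter the fitness as a simple violation count. All names are illustrative.

```python
def verify_symmetry(model, domain):
    """Stand-in verifier: return an x with model(x) != model(-x), or None.
    A real SMT solver would reason over the whole domain, not a grid."""
    for x in domain:
        if abs(model(x) - model(-x)) > 1e-9:
            return x
    return None


def fitness(model, data, counterexamples):
    """Squared error on the data plus the number of collected
    counterexamples that the model still violates (lower is better)."""
    error = sum((model(x) - y) ** 2 for x, y in data)
    violations = sum(
        1 for x in counterexamples if abs(model(x) - model(-x)) > 1e-9
    )
    return error + violations


def search(candidates, data, domain, max_rounds=10):
    """Pick the fittest candidate, verify it, and feed any counterexample
    back into the fitness function until a verified model is found."""
    counterexamples = []
    for _ in range(max_rounds):
        name, best = min(
            candidates.items(),
            key=lambda kv: fitness(kv[1], data, counterexamples),
        )
        cex = verify_symmetry(best, domain)
        if cex is None:
            return name, counterexamples
        counterexamples.append(cex)
    return None, counterexamples


# Training data covers only x >= 0, so x*|x| fits it as well as x*x does.
data = [(0, 0), (1, 1), (2, 4)]
domain = [i / 2 for i in range(-6, 7)]
candidates = {
    "x*|x|": lambda x: x * abs(x),
    "|x|": lambda x: abs(x),
    "x*x": lambda x: x * x,
}
name, cexs = search(candidates, data, domain)
print(name, cexs)
```

Here the asymmetric model `x*|x|` is selected first because it fits the data perfectly. Verification produces a counterexample, which penalizes that model in the next round and steers the selection towards `x*x`, the candidate that both fits the data and satisfies the symmetry constraint.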

Most of the publications of the team members are available in the SIN system.